Dan Fowler over at the OKFN frictionless data chat posted a link to an interesting initiative: Kaggle Datasets.

Kaggle is a platform for learning about, teaching and collaborating on all things machine learning. Users upload data, write and share analysis/visualisation algorithms (called kernels) and participate in challenges. Members are encouraged to share their code with others and review and comment on their fellows’ work. Kaggle is, in a way, a platform for collaborative learning.

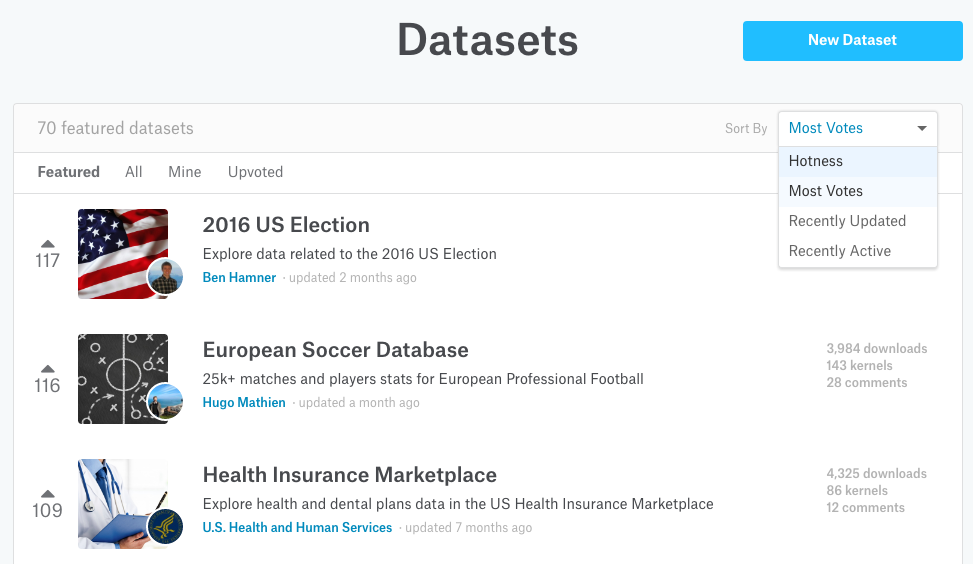

Datasets is a newly launched feature that adds collaboration features to the hosted datasets. Users are able to upload, share, discuss and explore data by crafting and sharing kernels. Datasets is fairly unique in that it brings data, algorithms and users together in one place. Users can vote and comment on almost anything, making the kernels/datasets legible. Kernels run in the browser, making them and the datasets they operate on extremely accessible and actionable.

Kaggle’s Datasets bring to data (science) what GitHub brought to software: open frictionless collaboration.

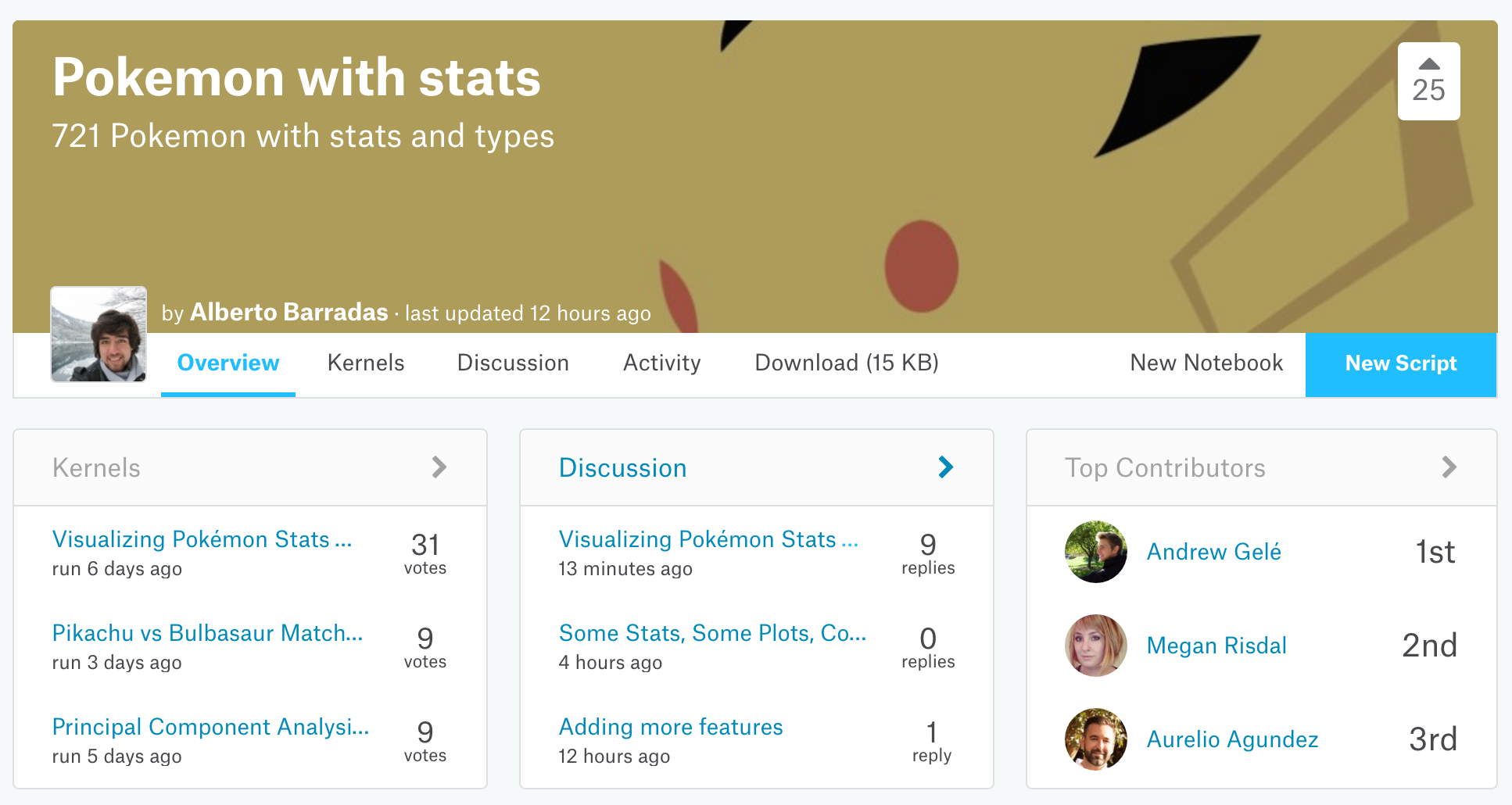

Each Kaggle dataset has a homepage that first shows the associated kernels, discussions and top contributors. Below that is an activity stream that shows everything there is to show, down to who ran which kernel version when. Only then does one arrive at the dataset’s description and preview.

The remarkable thing here is the focus on the execution, discussion and collaboration aspects of working with data. Contrast this with “traditional” data stores that put datasets and metadata first and foremost. In these platforms, collaboration aspects such as discussions, voting and knowledge exchange are often a badly implemented afterthought (if present at all).

This approach is salient as it shifts the emphasis from simply serving static datasets to facilitating the collaborative creation of insightful visualisations and analyses, a.k.a. information. This is, imho, a vital evolutionary step we (the open data community) need to make.

Datasets makes data, kernels and the collaboration process legible [I]: members can vote on datasets and kernels, and subscribe to each dataset’s discussion board. Discovering the most valuable/interesting artefacts becomes easy as the best ones simply bubble to the top [II]. Staying up-to-date is effortless.
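To make the idea of "bubbling to the top" a bit more concrete, here is a minimal sketch of how such signals could be combined into a ranking. The `Artefact` fields, the weights and the `legibility_score` function are my own assumptions for illustration; this is not how Kaggle actually ranks anything.

```python
from dataclasses import dataclass


@dataclass
class Artefact:
    """A dataset or kernel with the social signals users can see."""
    name: str
    votes: int
    comments: int
    forks: int
    downloads: int


# Hypothetical weights, picked only to illustrate the idea.
WEIGHTS = {"votes": 3.0, "comments": 2.0, "forks": 2.0, "downloads": 0.01}


def legibility_score(artefact: Artefact) -> float:
    """Weighted sum of the signals listed in WEIGHTS."""
    return sum(weight * getattr(artefact, field)
               for field, weight in WEIGHTS.items())


def rank(artefacts):
    """Return the artefacts sorted from highest to lowest score."""
    return sorted(artefacts, key=legibility_score, reverse=True)


if __name__ == "__main__":
    # Made-up sample data.
    sample = [
        Artefact("one-off-dump", votes=0, comments=1, forks=0, downloads=10),
        Artefact("well-loved", votes=10, comments=2, forks=1, downloads=500),
        Artefact("download-only", votes=2, comments=0, forks=0, downloads=3000),
    ]
    for artefact in rank(sample):
        print(artefact.name, round(legibility_score(artefact), 1))
```

The point is not the exact formula but that any visible, aggregated signal gives a user something to judge a dataset by before downloading it.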

Code and data are not all that is deemed important: the Top Contributors list is ordered by the number of submitted kernels as well as the number of comments made in discussions. Emphasizing the value of non-coding participation is significant as it potentially increases the number of people willing to participate [III].
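As a rough illustration of a contributor list that counts discussion as much as code, the sketch below tallies kernels and comments per user. The event format and the choice to simply add the two counts are assumptions on my part; I don't know how Kaggle combines them.

```python
from collections import Counter

# Activity kinds that count as a contribution in this sketch (an assumption).
CONTRIBUTIONS = {"kernel", "comment"}


def top_contributors(events, limit=3):
    """Count kernels and comments per user and return the busiest users.

    `events` is an iterable of (user, kind) pairs, a hypothetical format.
    """
    counts = Counter(user for user, kind in events if kind in CONTRIBUTIONS)
    return counts.most_common(limit)


if __name__ == "__main__":
    # Made-up activity stream.
    stream = [
        ("ana", "kernel"),
        ("ben", "comment"),
        ("ana", "comment"),
        ("ben", "comment"),
        ("ben", "comment"),
        ("cy", "download"),
    ]
    for user, total in top_contributors(stream):
        print(user, total)
```

In this toy stream a user who only comments ends up ahead of one who also wrote a kernel, which is exactly the kind of recognition for non-coding participation described above.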

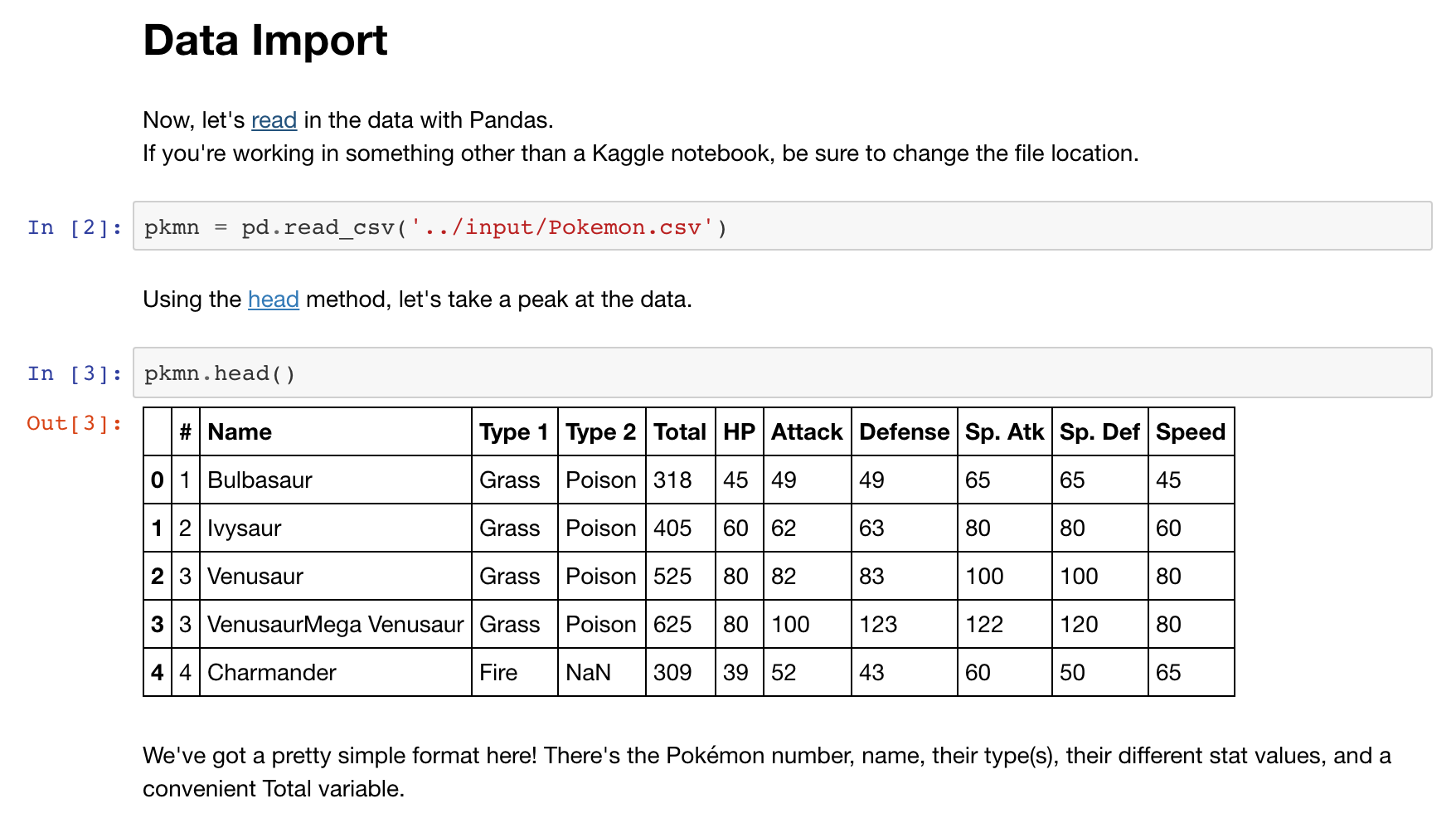

Clicking on a kernel opens its Notebook view that shows the code and its output. Having the executed code this close to the data makes the latter super accessible and actionable.

In the presence of a decent kernel one can simply scroll through the Notebook to quickly get a sense of the data. In addition to code, Notebooks carry text as well. This makes them an effective educational artifact: do you want to learn a certain data science library/technique? Simply scroll through an annotated Notebook to explore the most common operations.
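For a feel of what those "most common operations" tend to be, here is a small sketch of a typical first look at a dataset: count the rows, list the columns and check for missing values. It uses only Python's standard library, and the sample data and the `first_look` helper are invented for illustration rather than taken from any Kaggle kernel.

```python
import csv
import io

# Made-up sample data standing in for an uploaded CSV file.
SAMPLE_CSV = """city,year,population
Utrecht,2015,334000
Delft,2015,
Leiden,,122000
"""


def first_look(csv_text):
    """Summarise a CSV: row count, column names and empty cells per column."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = list(reader)
    columns = reader.fieldnames or []
    # A cell counts as missing when it is empty or absent.
    missing = {col: sum(1 for row in rows if not row[col]) for col in columns}
    return {"rows": len(rows), "columns": columns, "missing": missing}


if __name__ == "__main__":
    summary = first_look(SAMPLE_CSV)
    print("rows:", summary["rows"])
    print("columns:", ", ".join(summary["columns"]))
    for column, count in summary["missing"].items():
        print(f"missing in {column}: {count}")
```

Seeing this kind of summary already rendered next to the data is what saves you from writing it yourself for every dataset you consider.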

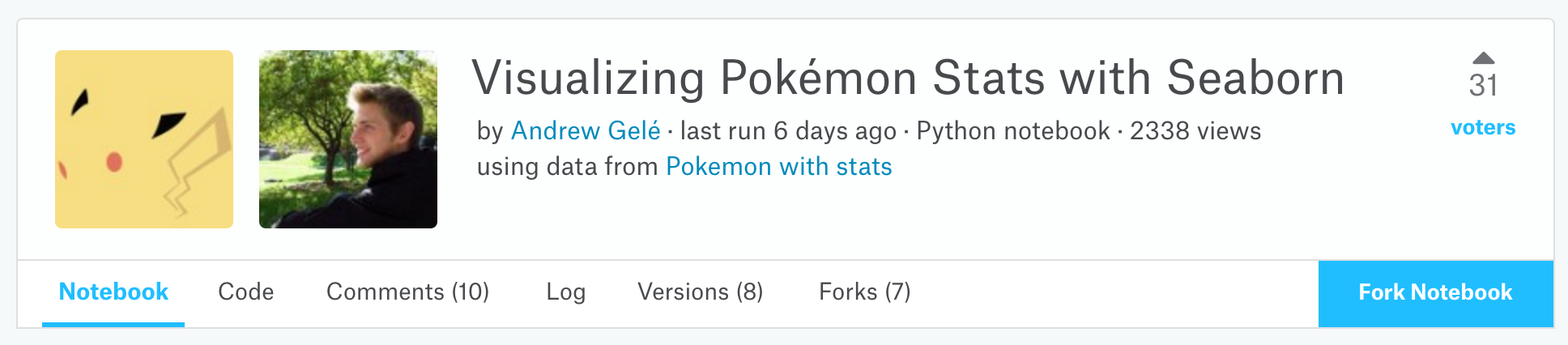

Kernels are, just like datasets, social and collaborative: you can leave comments, see who did what/when in the Log and inspect a kernel’s history. Each kernel retains a clear and visible link between a dataset’s owner and the kernel’s author. It’s refreshing to see a data store that puts users instead of datasets on a pedestal.

Does a dataset/kernel look interesting? You can of course download the dataset and/or a kernel but – and this is brilliant – you can also fork a kernel! Once forked, you can edit, test and run it in your browser. This makes datasets/kernels extremely actionable as you don’t have to download/clone stuff, open editors/IDEs, install dependencies, populate databases, etc. And since each kernel is linked to a dataset it is automatically exposed to the dataset’s users. Talk about oversharing!

Brilliant: legible, actionable and collaborative data

By now I’m sure you can tell I’m all warm and fuzzy on the inside. Datasets is brilliant as

- it makes data exploration extremely easy and frictionless: pick the highest rated dataset, scroll through one of its Notebooks and quickly judge if a dataset meets your requirements. Say goodbye to fruitless downloads!

- the Kernels make the data actionable: you can interactively explore datasets in the browser before downloading; forking an existing kernel gives you a head start on your analysis.

- it makes data social and collaborative: oversharing, rating and discussing are built into the system; picking up someone’s work and sharing your own is effortless.

- it is a user-driven information sharing platform.

In addition, Kaggle staff encourage data ownership. I clicked through a couple of newly uploaded datasets and saw numerous comments by Kagglers (?) encouraging the data owners to add descriptions and example code to their uploads.

Exciting: collaborative government open data

The Datasets infrastructure and workflow are potentially extremely transformative for government data. The short of it: the Datasets approach is the exact opposite of most government open data stores. And that’s a good thing.

Imagine a world in which government data, the code used to explore and analyse it, and the users using it are concentrated in one place. Together with data owners, they clean up and publicly discuss data, share insights and code, thereby collaboratively making the data much easier and more pleasant to work with, all the while increasing its value.

Compare this utopian vision to the current state of many open data stores. Most are nothing more than huge lists of difficult-to-discern datasets that give you few clues about: the quality and popularity (a.k.a. usefulness) of a dataset, who is currently using it and how, potential uses (a.k.a. inspiration), who the data owners are and how much they care, how quickly an error will be fixed, etc. Most data stores lack open communication channels: asking for assistance or voicing a suggestion often boils down to writing an email [IV]. Add the need to write boilerplate code or set up basic analysis/visualisation environments for each dataset separately, and you end up with a burdensome and expensive (in terms of time and effort) experience.

My hunch is that use of government open data will increase if data stores become more legible, social, collaborative and user-driven.

But…

The big question is, of course, will this work? Is it going to attract enough people to make it worthwhile [V]? Are people going to care for their data three, six, nine months from now, or is it going to turn into a dumping ground for one-off data experiments and lousy kernels?

Time will tell, but it’s refreshing and encouraging to see that folks are seriously experimenting with putting data users instead of data sets first and topping it off with the open collaboration spirit and workflow that is the norm in open source software.

Footnotes

| ↩I | I define legibility as the ability to quickly and effectively determine whether a dataset/script is useful by looking at votes/stars, comments, forks, downloads, etc. |
|---|---|
| ↩II | This voting system (or any, to be honest) tackles a long-standing problem of “traditional” data platforms: how do you (as a user) figure out if a dataset is worth the trouble? |
| ↩III | Code is, of course, not the only valuable thing in a healthy (data) ecosystem. |
| ↩IV | Emailing about these things is a terrible sin I’ll probably write about in a separate post. In short: email hinders open knowledge exchange. |
| ↩V | Datasets is, in a sense, a community platform. We all know how difficult these are to populate, right G+? |